Describing Images to Visually Impaired Users

Jun 2020 - ongoing

Efficient and Effective Visual Content Description for Visually Impaired Users

Images are an increasingly common form of communication in traditional and new media. They support, complement, and sometimes substitute for the information provided by the textual narration of a story; they enable and facilitate the analysis of complex phenomena; and, sometimes, they frame and contextualize a narrative, helping to convey emotions and opinions.

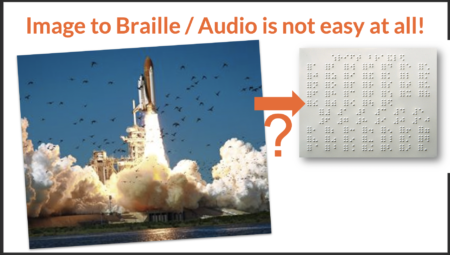

However, images are usually not accessible to people with vision impairments. Arguably, this is mainly due to issues of cost, scalability, timeliness, and quality. Professional manual captioning can produce high-quality image descriptions, but the activity is time-consuming and, therefore, expensive. Even when good-quality manual captioning can be achieved at scale (for instance, thanks to volunteers or paid workers operating on Web-based microtask platforms), the large volume of images produced daily severely limits the timely availability of image descriptions. Recent work on automatic image description and captioning based on machine (deep) learning shows that it is possible to describe the content of images at scale, at a low cost, and in a timely fashion. Alas, the quality of the resulting annotations varies widely across domains and languages.

This project seeks to develop a better understanding of the relationship between the information needs of visually impaired readers, their modes of interaction with different types of images (photographs, drawings, and infographics), and the technical capabilities of current Crowd Computing approaches for content analysis. The project will build upon previous work and insights from research projects by Dedicon and will adopt a mixed-methods approach combining state-of-the-art methods and tools in design and computer science.