Designing for Calibrated Trust for Acceptance of Autonomous Vehicles

Mar 2020 - ongoing

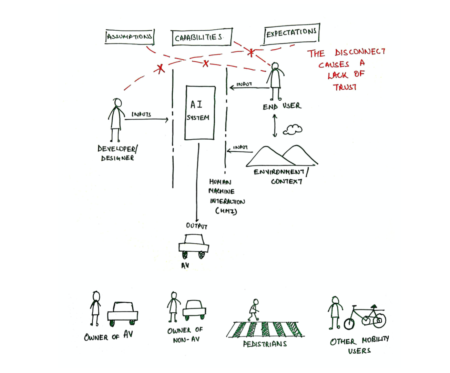

Research into the human values that influence the acceptance of autonomous vehicles in urban settings, focusing specifically on how trust is built between autonomous vehicles and their users.

The past decade has seen car manufacturers pour nearly 4 billion USD into the development and deployment of autonomous vehicles. This money was seen as an investment in the future of mobility, with an optimistic release of fully automated vehicles expected in the early 2020s. However, the development of autonomous vehicles has run into two prominent roadblocks. The first concerns consumer acceptance (Bienzeisler, 2017) and the second concerns regulation (Fagnant and Kockelman, 2015). The current project focuses on consumer acceptance, and more specifically on building trust to increase acceptance. The barriers to the acceptance of autonomous vehicles are listed below:

1. Over-reliance on machines (mistrust) (Trimble, 2008)

2. Lack of trust in the capabilities of autonomous vehicles (distrust) (Fraedrich and Lenz, 2014)

3. Specific risk of crashing (Daziano et al., 2016)

4. Non-autonomous traffic participants (Bazilinskyy et al., 2015)

5. System failure (Fagnant and Kockelman, 2015)

6. Breach of information and personal data (Fagnant and Kockelman, 2015)

7. Loss of the joy of driving (Fagnant and Kockelman, 2015)

The first two challenges to the acceptance of autonomous vehicles can be considered the two extremes of trust. Over-reliance on machines (mistrust) means placing more trust in the automated system than its capabilities warrant. An example is people falling asleep at the wheel of Level-2 vehicles when driving on highways. At the other extreme, lack of trust in the capabilities of autonomous vehicles (distrust) is when people refuse to acknowledge what the autonomous system can do. For example, users may not trust or use the lane assistance or reverse assistance systems present in their vehicles.

The other obstacles to autonomous vehicles (3-7) influence how much users trust autonomous vehicles, leading to either mistrust or distrust depending on the outcome. However, it is important that trust between autonomous vehicles and users does not sit at either extreme (distrust or mistrust). Trust needs to be calibrated. Calibrated trust can be defined as “the process of adjusting trust to correspond to an objective measure of trustworthiness”. In simpler terms, it is the ability to balance the capabilities of the autonomous system with the expectations of the end user. Research shows that calibrated trust allows for better implementation and acceptance of new technologies.

The project is carried out in association with the “Cities of Things Lab” and “People in Transit”. The Cities of Things Lab works in the field of smart cities and the role autonomous artefacts play in this future, whereas People in Transit focuses on addressing the changing landscape of mobility.

References:

Bazilinskyy, P., Kyriakidis, M., & de Winter, J. (2015). An international crowdsourcing study into people’s statements on fully automated driving. Procedia Manufacturing, 3, 2534–2542.

Bienzeisler, L. (2017). Impacts of an Autonomous Carsharing Fleet on Traffic Flow. ATZ worldwide, 119(7-8), 60-63.

Daziano, R. A., Sarrias, M., & Leard, B. (2016). Are consumers willing to pay to let cars drive for them? Analyzing response to autonomous vehicles.

Fagnant, D. J., & Kockelman, K. (2015). Preparing a nation for autonomous vehicles: opportunities, barriers and policy recommendations. Transportation Research Part A: Policy and Practice, 77, 167–181.

Fraedrich, E., & Lenz, B. (2014). Automated driving: individual and societal aspects. Transportation Research Record: Journal of the Transportation Research Board, 2416, 64–72.
